Why GP, NAV, and AX Manufacturers’ Data Is Not AI-Ready

Going live on a new ERP platform without clean data is like spending a fortune on a new supercar and pouring sugar and sawdust into the gas tank.

It is an educated guess, but I will wager that the data of a typical Microsoft Dynamics Great Plains (GP), NAV, or AX manufacturer is neither AI-ready nor upgrade-ready.

Let us look at a recent example of the data issues I have seen in my career helping companies select, implement, and fix ERP software projects.

I was advising a partner on why his project could not go live when I found additional data issues.

The first issue was that the client had transferred hundreds of thousands of routings to the new system. Simple math told me the required number was closer to one hundred. It turned out that the production department had created a new, unique routing for every part ever made. So an identical part produced multiple times was not tracked on the same routing but on a new, unique routing each time.
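A check like this can be scripted before migration. The following is a minimal sketch, not the method used on the project: it assumes each routing can be exported as a routing ID, a part number, and an ordered list of operation codes. The function name, the tuple layout, and the sample values are all hypothetical.

```python
from collections import defaultdict


def find_redundant_routings(routings):
    """Group routing IDs that share the same part and operation sequence.

    `routings` is an iterable of (routing_id, part_number, operations)
    tuples (a hypothetical export layout), where `operations` is an
    ordered sequence of operation codes.
    Returns {(part_number, operations): [routing_id, ...]} for every
    group that contains more than one routing.
    """
    groups = defaultdict(list)
    for routing_id, part_number, operations in routings:
        # A tuple is hashable, so the operation sequence can be part of the key.
        groups[(part_number, tuple(operations))].append(routing_id)
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Made-up sample data for illustration only.
sample = [
    ("R-001", "P-100", ["CUT", "WELD", "PAINT"]),
    ("R-002", "P-100", ["CUT", "WELD", "PAINT"]),
    ("R-003", "P-200", ["CUT", "DRILL"]),
]
redundant = find_redundant_routings(sample)
for (part, ops), ids in redundant.items():
    print(part, ops, ids)
```

Every group the function returns is a candidate for consolidation into a single routing, subject to review by production.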

The second, equally significant data problem was the number of duplicate items, which I suspected were filling the item file with junk data.

The client creates many engineer-to-order parts supported by a large engineering staff. When I suggested that we analyze the item file for duplicates before importing them to the new system, the VP of engineering was highly insulted and outraged. He assured me that his item master was pristine with zero duplicates because of the tight controls in his department.

However, I have seen multiple engineering departments where the system used to create a new engineering bill of material made it easier to create a new part on the fly rather than find the part in the existing item file.

Prepared to be proven wrong and pleasantly surprised by this group of engineers' efficiency, I asked for an export of the item file. To get ready, I brushed up on Excel's Boolean logic capabilities to find potential duplicate parts. I could have saved myself time and effort.

When I opened the item master in Excel, a cursory scan of the first page showed SEVEN different item numbers with the SAME item description. A simple sort and review showed hundreds of thousands of duplicates. In my experience, dirty-data scenarios like these are not unusual.
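The same sort-and-scan can be done outside Excel. Here is a minimal sketch in Python, offered as an alternative to the spreadsheet approach described above rather than a record of it. It assumes the item master is exported as CSV with `item_number` and `description` columns; those column names, the normalization rule, and the sample rows are assumptions for illustration.

```python
import csv
import io
from collections import defaultdict


def normalize(description):
    """Uppercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(description.upper().split())


def find_duplicate_descriptions(rows):
    """Map each normalized description to its item numbers, keeping only
    descriptions that are shared by more than one item number."""
    by_description = defaultdict(list)
    for row in rows:
        by_description[normalize(row["description"])].append(row["item_number"])
    return {desc: nums for desc, nums in by_description.items() if len(nums) > 1}


# Made-up sample export; a real run would open the exported CSV file instead.
sample_csv = """item_number,description
A-1001,Hex Bolt M8 x 40
A-1002,HEX BOLT  M8 X 40
B-2001,Flat Washer M8
"""
rows = list(csv.DictReader(io.StringIO(sample_csv)))
duplicates = find_duplicate_descriptions(rows)
for description, item_numbers in duplicates.items():
    print(description, item_numbers)
```

Exact matching on a normalized description only catches the easy cases. Abbreviations, reordered words, and typos need fuzzier matching and a human review of each candidate group.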

Poor data quality can significantly hinder the effectiveness of AI in forecasting and analysis within manufacturing. Here is a brief list of issues that may arise:

- Inaccurate Predictions: AI relies on historical data to make forecasts. If the data is flawed, predictions will be unreliable.

- Quality Control Problems: Bad data can lead to incorrect product quality assessments, resulting in undetected defects.

- Maintenance Challenges: Predictive maintenance becomes problematic if the data about machine performance is incorrect or incomplete.

- Operational Inefficiency: AI uses data to optimize processes. Poor data can lead to suboptimal decisions and processes.

- Adaptation Issues: Unreliable data-driven insights make it hard to adapt quickly to market changes.

- Decision-Making Errors: Strategic decisions based on poor data can lead to costly mistakes, especially around product costing and sales analysis.

These issues underscore the importance of ensuring high-quality data for AI applications in manufacturing. Eliminating duplicates is only the first and simplest step of a sophisticated data cleansing project that produces meaningful and valuable data.

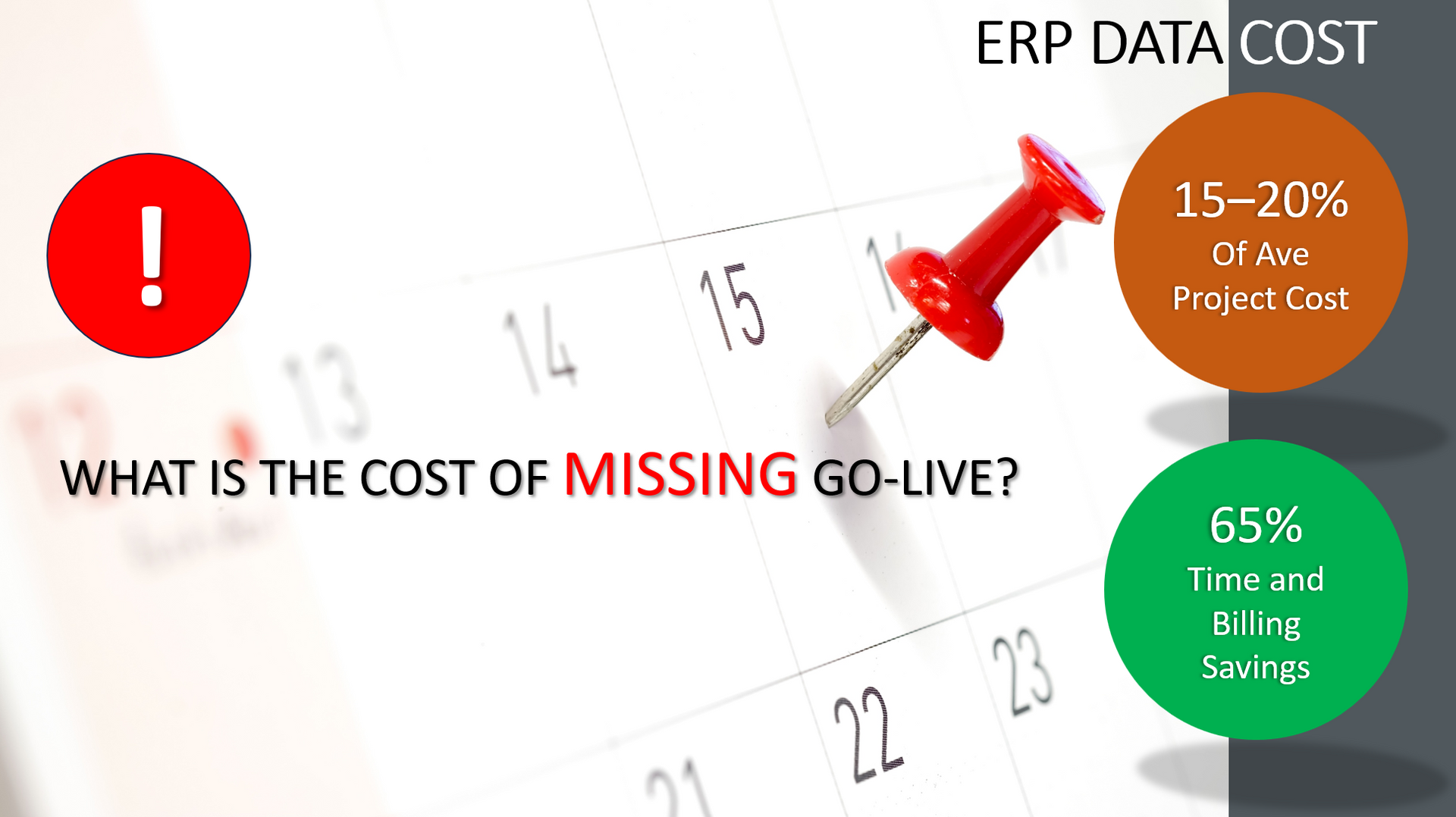

Pro-Tip: If, during the contract negotiation phase for your new ERP platform, someone makes the time- and money-saving suggestion that data can be migrated via Excel templates, expect massive change orders and a rescheduled go-live date approximately two-thirds of the way through your original budget and project timeline.

Call us for a meaningful conversation on how to clean and convert data to be AI-ready.

HandsFree ERP is dedicated to supporting clients with their ERP initiatives, enabling companies to seamlessly connect users with their ERP partners. By utilizing skilled professionals, streamlined processes, and cutting-edge tools, HandsFree ERP significantly boosts the success rates of ERP projects.